An exhaustive set of notes about logging

Lessons I’ve learned over the years about the invaluable, but occasionally painful tool.

Lessons I’ve learned over the years about the invaluable, but occasionally painful tool.

“record of observations, readings, etc.,” originally “record of a ship’s progress,” 1842, sailor’s shortening of log-book (1670s), the daily record of a ship’s speed, progress, etc., which is from log (n.1) “piece of wood.” — https://etymonline.com/word/log

“Logging” or “logs” are a standard part of the developers toolkit. In the simplest way, logging statements are simply expressions that a program has hit a point of execution. For example, the following program logs “Hello World” in the main() function:

include <stdio.h>int main (int argc, char* argv[]) {

printf("Hello world!\n");

return 0;

}Arbitrary logs are regularly inserted into the program by developers during development looking to match the programs execution flow against its actual flow, to find faults.

However, beyond this simple ad-hoc mechanism of expressing program state, there are much larger uses and various semi-standards for logging

The many different species of log

Because a “log” is simply a recording or reading, there are many different types of logs. There are:

Access / audit logs

Access logs are created (“logged”) during the standard execution of software when a given protected resource is accessed. They record some information such as:

Who accessed a resource

When did they access this resource

What resource did they access

Whether that access was granted

They’re designed to provide a mechanism to trace whether a given user is supposed to access or modify a given resource and when they did so. Such logs are extremely useful in “Digital Forensics and Incident Response” (DFIR) to determine whether any unauthorised users were able to access resources they should not have.

An example of this log would be:

2018-07-25 09:49:58.818 UTC: User "andrew.howden@sitewards.com" added firewall "website--public" to the network "default"Error Logs

Error logs are logged when it’s determine that the software has hit an unexpected condition, and cannot proceed. Something like:

The browser is not telling a website what it is, but the website is trying to vary it’s results based on the website.

The application attempted to connect to the database, but failed

The application attempted to execute a query against the database, but the database returned a result indicating the query was invalid

Unexpected conditions are an extremely common condition in software. Software is an extremely complex set of “conditional statements”, in which many different things are expected to be just so, and when they’re not the software chokes.

To assist in finding these software failures, developers will anticipate the conditions in which the application will fail and add logging statements such that if the software does fail, the failure will be logged and the developers will be able to later determine when and why the software failed.

An example of this log would be:

2018/08/09 16:35:05 [crit] 10326#10326: *21732375 SSL_do_handshake() failed (SSL: error:1408A0D7:SSL routines:ssl3_get_client_hello:required cipher missing) while SSL handshaking, client: 12.345.67.89, server: 0.0.0.0:443Debugging Logs

Unfortunately, there are some bugs which even the most experienced developers cannot anticipate. When one of those bugs reported, it’s extremely difficult to get an understanding of how the application got into the state in which it failed without the application telling the developer what’s happening internally.

Debugging logs are lots of significant state changes in an application. They’re usually disabled, but can be enabled optionally. When they are enabled they provide key insights into how an application arrives at a given logical decision, which can assist the developer in understanding how the application got into the state in which it failed.

An example of debugging logs are as follows:

2018/08/09 17:39:55 [debug] 20051#20051: *1 http process request line

2018/08/09 17:39:55 [debug] 20051#20051: *1 http request line: "GET / HTTP/1.1"

2018/08/09 17:39:55 [debug] 20051#20051: *1 http uri: "/"

2018/08/09 17:39:55 [debug] 20051#20051: *1 http args: ""Logging “well”

Given that logging is such a simple and elegant solution to understanding state, there are a bizarre number of ways to “log”. However, over time systems administrators have arrived at a number of lessons to make understanding logs much easier, especially in the context of multiple systems.

Below are some of the lessons I’ve been lucky enough to have been taught or discover:

Independence

When writing a log, it’s extremely tempting to write it with the context that you understand to be the case when writing it, or with the context of other logs that you’ve recently written. Imagine for example that you must write a PHP application in which you send an email:

<?phpecho 'Sending an email';$ret = mail('hello[at]andrewhowden.com','Legit Mailz', 'I am legit mails');if ($ret !== true) {

echo 'The mail failed.';

}You could imagine this will end up like:

# /dev/stdout:1-2Sending an email

The mail failedHowever, practically this is often not the case for several reasons:

There may be several different versions of this mail script running at the same time

The contents of the log might be aggregated into a log aggregator with many other logs

The logs might arrive out of order

A log might not arrive at all

It’s tempting to imagine logs as a reliable debugging tool, but practically they’re not. It’s very rare to fail an application entirely if logs can’t be written, so it’s more likely the application will simply try to log as best it can, and if that doesn’t work, proceed anyway.

Accordingly, it’s important to write our logs so that they can be understood without the context of any other log. Given the example above, we can restructure it like:

<?phpecho sprintf('Sending an email with the id "%s" to "%s", $mailId, $destination;$ret = mail('hello[at]andrewhowden.com','Legit Mailz', 'I am legit mails');if ($ret !== true) {

echo sprintf('The mail with id "%s" to "%s" failed: mail() returned false', $mailId, $destination);

}This allows a much more detailed log that tolerates out of order messages, messages going missing or being aggregated with other logs:

The mail with id "5b6c66c879f4d" to "hello[at]andrewhowden.com" failed: mail() returned false

...

${OTHER_LOGS}

...

Sending an email with the id "5b6c66c879f4d" to "hello[at]andrewhowden.com"Structured

There are certain classes of information that are extremely useful when attempting to determine the cause of a given error. Later in this article we’ll cover an opinionated format that defines certain information that should be included in logs, but for now it’s enough to say that it’s worth using a logging tool that renders information in a structured format with additional metadata. Such metadata might include:

The time of the issue

How severe the issue is, according to the Syslog severity standard.

The service that generated the log

The hostname that the service was executing on

The request id

The user id

As well as many other forms of meta data.

In the example above we used a simple echo statement to generate our logs. However, in PHP there is an extremely popular logging library called “Monlog”. Adjusting our example before to:

<?phprequire_once __DIR__ . '/vendor/autoload.php';$logger = new \Monolog\Logger("log");

$logger->pushHandler(new Monolog\Handler\StreamHandler('/dev/stdout', \Monolog\Logger::DEBUG));$ret = mail('hello[at]andrewhowden.com','Legit Mailz', 'I am legit mails');if ($ret !== true) {

$logger->info(sprintf('The mail with id "%s" to "%s" failed: mail() returned false', $mailId, $destination));

}products the much more complete log:

[2018-08-09 11:18:14] log.INFO: The mail with id "5b6c66c879f4d" to "hello[at]andrewhowden.com" failed: mail() returned false {"file":"/home/andrewhowden/Development/php/bork.php"} []This log is much easier to correlate against other reports of a bug by customer service or other team members, and provides the detail about in which file the issue occurred.

Using standard logging libraries is often a great way to gain a large amount of logging knowledge for limited effort. They generally provide good APIs with which to record error, debug or audit logs, and can be plugged in to many log storage processes.

Rated by severity

Not all logs are equal. We cannot know ahead of time which information will prove valuable, nor is all information of the same sematic value. The log:

The application has encountered a critical failure: cannot connect to the databaseis much more important than:

The application has sent an email to `hello[at]andrewhowden.com`Accordingly, when users search through logs to determine the cause of an issue, they want to be able to see only information of a given severity.

The syslog standard was released in 2009, and provides some guidance as to how to refine a log into a given set of severity levels:

Emergency: system is unusable

Alert: action must be taken immediately

Critical: critical conditions

Error: error conditions

Warning: warning conditions

Notice: normal but significant condition

Informational: informational messages

Debug: debug-level messages

These levels are an extremely common representation of how severe logs should be, and are well supported by various log systems. Practically, I find only “Info”, “Warning”, “Error” and “Emergency” (or “Fatal”) levels useful, though there are some that use even fewer.

Given our example above, we can set the levels appropriately with the Monolog library:

$logger->info(sprintf('Sending an email with the id "%s" to "%s"', $mailId, $destination));$ret = mail('hello[at]andrewhowden.com','Legit Mailz', 'I am legit mails');if ($ret !== true) {

$logger->error(sprintf('The mail with id "%s" to "%s" failed: mail() returned false', $mailId, $destination));

}Will produce:

[2018-08-09 11:38:28] log.INFO: Sending an email with the id "5b6c66c879f4d" to "hello[at]andrewhowden.com" [] []

[2018-08-09 11:38:28] log.ERROR: The mail with id "5b6c66c879f4d" to "hello[at]andrewhowden.com" failed: mail() returned false [] []which can be filtered down with grep 'log.ERROR' to:

[2018-08-09 11:38:28] log.ERROR: The mail with id "5b6c66c879f4d" to "hello[at]andrewhowden.com" failed: mail() returned false [] []Making it much easier to find the application errors. It’s also worth noting that one should take care and consideration marking software issues as errors. If the error can be ignored, it’s not an error. If everything is an error, nothing is.

Simple

It’s tempting to consider how logs will be viewed when writing log information. Given the followng example:

// This code is a bad idea for other reasons; it's only a logging

// example

$oImporter = new Importer();echo ('Starting importer');

$oImporter->importCategory(['categoryId' => 1, 'categoryContent' => [...]);

echo '→ Imported category with id "1"';will result in

Starting importer

→ Imported category with id "1"Which is easier to read. However, logs are not only consumed directly in a file, or on a screen. The additional formatting which makes it easier to read makes it much more difficult to parse when you’re looking at the logs in aggregate:

# $ cat import.log | grep 'category'

→ Imported category with id "1"

→ Imported category with id "15"

→ → → → category failed: invalid category contentLogs are consumed in a variety of different ways across different systems both by humans and by software analysing the logs. By making as few assumptions as possible when writing the logs, other tools do not have to be modified to parse our custom format:

$ cat import.log | grep 'category'The importer started processing category with the id "1"

The importer started processing category with the id "15"

The importer failed to process the category with the id "1": The category content failed validation (content was not of the type 'array')This gives a much clearer understanding of the logs and allows us to debug issues more rapidly.

Stupid

When writing logs, it can be tempting to also handle some additional log meta-management in the application. Things like:

Reading back logs

Rotating logs

Deleting logs after a certain time

Pushing logs to a specific file or socket

Log to multiple streams

Counter-intuitively, this handling often introduces additional complexity in to the log management for systems administrators that is difficult to overcome. More specifically,

Reading back logs can introduce vulnerabilities into the application such as XSS, as well as leak session or other secret data.

Rotating logs can interfere with other tools that do this same task in unpredictable ways, or the log rotation can break with large files.

Log deletion may happen on a schedule that’s not expected by the log management team

Logging to multiple streams indicates that logs are sometimes unavailable in a centralised pool, or if they are so may be duplicated.

Most modern Linux systems do an excellent job of handling the two information stream “STDERR”, which is designed for exactly this sort of software metadata. Given software run by:

Systemd, the logs will end up in the

systemd-journaldwhere they can be read back withjournalctlDocker/Kubernetes, the logs will end up in

/var/log/containers(or where configured), and can be read back withdocker logs

Other software management solutions equally have sane mechanisms to handle the STDERR stream.

Audience specific

Logs invariably have an implied audience in mind when the’re being written. Consider the following log:

Failed to connect to upstream server 17.235.12.1 (443) server refused the connection (SYN-RST)A developer with knowledge of TCP internals may be able to read this log and understand immediately that the machine likely tried to connect over HTTPS but was actively refused. However, consider the following user facing log:

This message is entirely unhelpful to users that are simply browsing a website, and have hit unanticipated behaviour. The log, though correct, is unhelpful. Accordingly, when writing a log it’s worth writing it explicitly for the understanding of those consuming it:

This gives those who are consuming the error an understanding of what happened, why it happens and what to do to resolve it; it’s a much more useful log.

It’s still worth logging the aforementioned TCP connection failure, but in a developer facing log and not directly to the consumers.

Relevant

Lastly, in terms of guidelines for writing logs, it’s important to understand when not to log. Logs are extremely useful to convey various parts of non-structured application state; the key phrase there being “non-structured”.

There are common patterns to analyse applications, such as:

A numerical representation to how often the application has done some task

A call-graph profile of time the application spent doing a given task

In both those cases there are tools that are designed explicitly to pull out and manage that information: Time series data and transaction tracing respectively. These are both excellent approaches that yield often more useful and actionable information than logs, but that require an extremely rigid approach to export the data.

Logs are not the right approach to express the application state if it can be formalised in another, more structured approach. In a sense they’re the “last alternative” for determining application state.

Aggregating

The above guidelines about logging make the implication that logs will be consumed in a variety of ways. More specifically, logs from many different applications will be collected together in a single tool for review and analysis by either other software or a systems administrator.

On-machine aggregation

The process of centralising logs for simpler review is one that has been in development for many years and has a number of stable protocols that make it simple to log to a centralised, managed service.

Perhaps the most prevalent logging interface is the “syslog” standard. This standard, mentioned earlier in the discussions about “log levels”, is implemented on just about every Linux machine in some capacity. It’s extremely common for system services to either log to syslog directly or allow logging to syslog; the following applications all support it with only minor changes in configuraiton:

Indeed, it’s difficult to find applications that do not implement this logging specification.

The syslog specification has been been implemented by a number of different tools, but in most modern systemd based Linux distributions is handled by a service called systemd-journald. This journal allows both writing to syslog via a syslog compatible interface and reading back from the logs via the journalctl command. The journal even allows filtering and sorting of the logs in various ways, and fails back to the shell when the filtering isn’t natively supported by the journal:

# Get the logs from the Linux kernel

$ journalctl --identifier=kernel# Get the logs from the systemd "prometheus" service

$ journalctl --identifier=prometheus# Get the logs from the osqueryd service after midday

$ journalctl --since=12:00:00 --identifier=osqueryd# Get all the logs from all applications of

# syslog level "Error" (3) or higher:

$ journalctl --priority 3As well as a host of other filtering options. A full list of these options is available in the journald man page.

Aggregation on machine is a useful “first step” to getting all logs in a centralised place for querying and understanding what’s happening on a machine. It is an extremely reilable, self managing service that does not require much input from the application developer aside to configure the application to write via the syslog protocol.

Off-machine aggregation

As powerful as the systemd-journald application is, it’s still difficult to understand logs in their entirety. The journal does not allow easy understanding of complex logs, does not allow an overview of problems that affect multiple hosts and it requires access to the machine directly. Accordingly, once machine level logging has been implement it’s a good idea to transport the logs from this single machine into an aggregation service.

There are many different aggregation tools, services and implementations that form a log aggregation stack. Some of the most popular implementations are:

The ELK stack (FOSS)

The EFK stack (FOSS)

Graylog (FOSS)

logz.io (Hosted)

Loggly (Hosted)

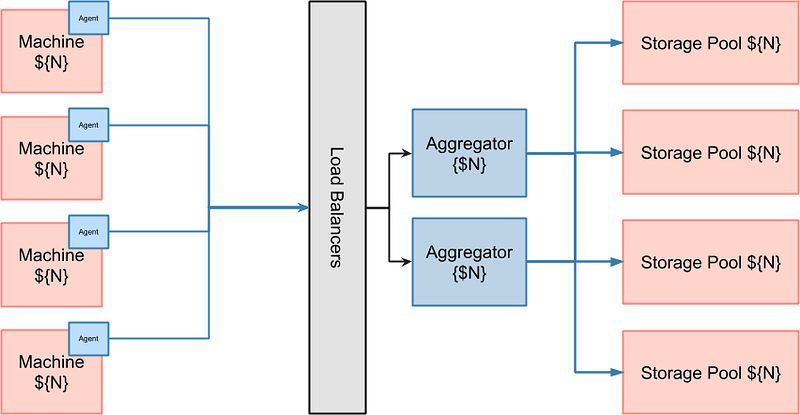

They all follow approximately the following architecture:

An agent is installed on each each machine which reads back and sends the logs (via a load balancer) to applications that are designed to receive, transform and insert those logs into a storage pool. The logs can later be read back by any software that implements the storage pool API.

Regardless of whether you choose to implement the services, there should be a common set of things that you should be looking for:

Use standard software. There are open source solutions to many of these problems, and using the “standard” tooling prevent many silly problems that have already been solved. The canonical agent is “fluentd”

Encrypt connections. While logs should be safe, it’s not uncommon for secret information or other such data to leak into logs. Should this be sent over an unencrypted connection, there’s a chance that an intermediary will log or modify the information themselves and the information becomes compromised.

Use the infrastructure. Tooling around logging has been around for a tremendously long period of time, and there are thus standard ways of handling it that are embedded into most operating systems. By using those instead of custom protocols or binaries logs will be much more reliable and predictable, and costs of tooling will drop.

However, this broad approach does not provide immediate guidance as to which tools to use. As part of ongoing project work, the stack I would recommend is the following:

The agent: the fluentbit agent is the successor to the fluentd agent, and is the tool designed to read back and send logs. It’s open source, owned by the Cloud Native Computing Foundation (CNCF, or Linux Foundation) and designed to minimise complexity, responsibility and dependencies. Broadly, it’s a stupid simple log forwarder.

The aggregator: Fluentd/Fluentbit are both suitable mechanisms for log aggregation, though fluentd has a larger amount of output plugins and greater support by the community (as it’s older). It includes a plugin for Stackdriver logging, which is important because:

The storage pool: Stackdriver logging (from Google Cloud) provides an extremely generous free tier of 50gb per month, which allows at least the demonstration of a proof of concept for the costs of of logging. Beyond 50gb, it could be reevaluated depending on the costs and difficulty hosting an ElasticSearch cluster, but this is independent of the initial implementation.

This implementation has not been tried yet, but should be rolled out in the next few weeks.

Binary formats

Log aggregation buys us some tremendously valuable properties when dealing with logs. Centralised view of logs, access control lists, multi-hosts logs, automated detection of issues are all super nice properties of using such an aggregated system. However, perhaps the best part of an aggregation system is the powerful querying interface that many of them provide.

If we consider a log as an expression of a set of properties about a system, we should be able to query logs that match a given set of properties. Consider the log:

::1 - - [15/Apr/2018:18:18:56 +0200] "GET /favicon.ico HTTP/1.1" 404 200 "http://localhost/" "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/65.0.3325.181 Safari/537.36"It is a semi-structured text blob containing:

The IP that made a connection

The date the request occurred

The request itself

The result of the request (404)

The referrer

Information about the user who requested the page

We can parse this fairly standard structure to get a list of all requests that included “/favicon.ico”, or “404” or any other property. However, practically it’s fairly difficult to do this. Formats aren’t well defined and in many case text parses break when trying to understand them. Additionally, some information is missing from this log — is it an Apache log or an NGINX log?

One mechanism to address this difficulty in parsing such logs is to simply encode them by default in a machine readable format. Given the above log, we could structure it:

{

"ip": "::1",

"date": "15/Apr/2018:18:18:56 +0200",

"request": "GET /favicon.ico HTTP/1.1",

"result": 404,

"referrer": "http://localhost",

"useragent": "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/65.0.3325.181 Safari/537.36"

}This allows machines to easily understand each property of the log. Instead of needing to parse the result with some sort of custom text parser, the processor can use the well defined JSON spec:

$ cat log.txt | jq '. | select(.result == 404)'{

"ip": "::1",

"date": "15/Apr/2018:18:18:56 +0200",

"request": "GET /favicon.ico HTTP/1.1",

"result": 404,

"referrer": "http://localhost",

"useragent": "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/65.0.3325.181 Safari/537.36"

}Binary logging thus makes it much easier to understand the logs en masse at the cost of making them slightly more difficult to read in the command line.

In my mind, this is a tradeoff that is well worth making. At worst, it’s possible to restructure the results of the structured log back into the line based format with a command line tool jq:

$ cat log.txt | jq '. | select(.result == 404) | "[\(.date)] \(.request) \(.result) \(.useragent)"'"[15/Apr/2018:18:18:56 +0200] GET /favicon.ico HTTP/1.1 404 Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/65.0.3325.181 Safari/537.36"At the cost of this readability we purchase the ability to search many millions of logs for specific criteria, such as a request Id, result code, IP address or other unique identifier. It is additionally possible to aggregate and deduplicate structured logs in a way that is difficult with the non-structured alternatives.

Expressing logs binarily

Application support

Most applications will support JSON directly, or with minimal configuration. Even if an application does not support expressing logs in JSON it will usually support expressing a custom plain text format that can be rendered as JSON.

The following links show how to configure their respective applications for JSON logging

Aggregator support

In the case the application does not support JSON logging directly, often times the aggregator such as fluentd or logstash can modify the logs such that they’re in the format required.

Implementing this support depends on the aggregator itself, but usually involves parsing the log with some sort of regular expression or modification plugins to add the required data.

The recommended fluentbit forwarder is capable of this sort of transformation.

One format to rule them all

Binary formats are excellent, but it is difficult to reconcile application logs across many different software packages unless they follow some sort of standard log format. Unfortunately, there does not appear to be one (binary) log format that has become more popular than the others, so we’re forced to develop our own.

To make this as sane as possible, the following is a structured reference that I’ve been developing for a couple of years, and implementing where I get time. Further work may refine this specification, but this article should be kept up to date.

The following fields are expected to be in all log entries following the format as defined by this article:

Time

{ "time": "2015–05–01 15:00:00+02:00" }The time is a string representation of the exact time of the log. It should be expressed in the format defined by RFC 3339. For example:

2015–05–01 15:00:00+02:00

An an ideal world, machines should all operate in UTC however practically it’s common to find machines configured to the local timezones (such as desktops). In all cases, the logs should include the date.

This is designed to allow correlating multiple logs, perhaps across different software stacks with the same event. Often events are externally reported by users or other team members rather than by automated software analysis, and it’s only after the report that users go digging into the logs to determine what happened.

Version

{ “version”: “3.0.0” }The specification as defined in this article has changed many times, and will continue to be refined and developed into the future. As it’s changed, this version number is updated.

Environment

{ "environment": "development" }This is an arbitrary string that defines in what sort of environment this application is being run in. In most environments this would be one of:

Development

Testing

Staging

Production

And is designed to allow filtering of logs that are, in a sense, designed to fail (such as those in development and testing).

Host

{ "host": "sw-20160616-01.desktops.corp.sitewards.net" }The human-readable identifier (fully qualified domain name or FQDN) for the machine that the service was running from. For example, the FQDN of my laptop is:

sw-20160616-01.desktops.corp.sitewards.netA web node might be:

www1.sitewards.deThis is designed to allow identifying the host from where the log was generated so that if the issue arises from some reconfiguration on the host, that host can be identified and fixed.

Service

{ "service": "nginx" }The service is the application that generated this log. Some examples might include:

NGINX

Redis

Magento

Or any other application name. It is not the command line of the process specifically, but rather a human readable representation of the application name.

This is designed to allow determining issues that are affecting certain specific software packages.

Process ID

{ "pid": 12830 }The process id is the numeric id assigned to any given process such that it can be referenced by other processes. It’s designed to allow reconciling the application that generated the log with other system analysis tools such as OSQuery or the Linux audit subsystem. It additionally allows developers to identify the exact process that is generating the logs, such that they can go and instrument the process more fully with eBPF or strace to determine what’s happening with that process.

Request ID

{ "request_id": "094c20c2–9caa-11e8–905d-efdf3de6ade0" }A request id should be assigned whenever an external request hits an application, and should be propagated and logged with all other applications that are touched following the generation of the request id. The format should be the defined UUID standard, for example:

094c20c2–9caa-11e8–905d-efdf3de6ade0

20536672–9caa-11e8-a628-ef8251eee850

209eb3d4–9caa-11e8-aecc-5f896deeb6f5

This is designed to allow reconciling the same issue across multiple applications in a distributed

Type

{

"type": "web",

"payload": {

"status_code": 404,

"user_id": "bcf30456-9caa-11e8-806b-f715afce833c",

"request_url": "/",

"request_method": "GET",

"remote_addr": "127.0.0.1"

}

}This specifies the “type” of log. It’s used to define the format of the content in the “payload” section, which can vary based on what application the log comes from.

At the time of writing, there are no specds for type defined, but some examples in the form “type”: “payload fields” include:

Web (1.0.0): status_code, user_id, request_url, request_method, remote_addr, host

Audit (1.0.0): success, user_id, remote_addr

Error (1.0.0): severity, remote_addr

Magento (1.0.0): severity, store_code, request_method, request_uri, remote_address,file,line_number,message

Debug: message

Payload

{

"type": "cake_service",

"payload": {

"version": "1.0.1"

"cake": "cheeze",

"tuesday": "friday"

"dragon": "yes"

}

}The payload is where the arbitrary data goes. The specific format should be indicated by the type; in the case the type is not known, simply make one up.

The payload is versioned just as the structured log format is such that even if the type format is a made up one, it’s clear when that made up format changes and queries need to be adjusted to understand the old and the new format separately.

Accordingly, the only fields required for payload are version, and a type should exist.

Example

The following provides an example of an error log of the format described above, and how it can be parsed. For example, given the log:

{

"time": "2018-06-30 16:04:54+02:00",

"type": "error",

"hostname": "0f47a4aa2aac",

"pid": "9",

"service": "apache2",

"payload": {

"severity": "notice",

"message": "core: AH00094: Command line: /usr/sbin/apache2 -D FOREGROUND",

"version": "1.0.0"

},

"environment": "production",

"version": "2.0.0"

}with the command:

echo "${LOG}" | jq '. | "[\(.time)] \(.severity) | pid: \(.pid) | \(.payload.message)"produces:

"[2018-06-30 16:04:54.009287] notice | pid: 9 | core: AH00094: Command line: /usr/sbin/apache2 -D FOREGROUND"In Conclusion

Logs provide an extremely valuable source of data about application state, and can significantly reduce the cost of investigating and resolving issues. With time, lessons such as the way in which to format the logs, usage of aggregation systems, usage of logs to determine and enforce other policy have contributed to further reducing the ongoing operational cost of software.

Note

I wrote this article up as something of a definitive guide. It will be updated over time.

Thanks

Sitewards. This article took a day to write, and they were kind enough to give me the opportunity.

Antonius, for reading and review

Matthew Beane, Aaron Bonner and Anton Siniorg for input about logging

Zeno Pensky for waiting until this is done, and for reading it

Francis M. Gallagher, Rian Orie, Douglas Radburn, Brandon Cole.

This article took many, many hours to write. During the time of writing various people were kind enough to encourage me to continue. In deference to them, here’s a dragon ninja:

,===:'., `-._

`:.`---.__ `-._

`:. `--. `.

\. `. `.

(,,(, \. `. ____,-`.,

(,' `/ \. ,--.___`.'

......... , ,' ,--. `, \.;' `

| Yo I | `{D, { \ : \;

| am | V,,' / / //

|totally > j;; / ,' ,-//. ,---. ,

| NINJA | \;' / ,' / _ \ / _ \ ,'/

````````` \ `' / \ `' / \ `.' /

`.___,' `.__,' `.__,'Art by VZ